Internet usage patterns and delivery of software applications are undergoing major paradigm shifts. Decentralization is the primary pattern – transitioning away from fixed network entry points, concentrated clusters of compute and single data stores. These changes are being driven by the rapid evolution of work habits, software architectures, connected devices and data generation. After years of Internet resource convergence, we are witnessing a shift towards the broader distribution of compute, data and network connectivity.

Software applications are pushing processing workloads and state outwards towards the end user. This transition began to lower response times for impatient humans, but will become a necessity to coordinate fleets of connected devices. With work from anywhere, network onramps cannot all be routed through central VPN entry points protected by firewalls. Distributed networks backed by dynamic routing will increasingly facilitate point-to-point connections between enterprise users, their productivity apps, corporate data centers and local offices. Massive troughs of raw IoT data have to be summarized near the point of creation before being shipped to permanent stores.

These changes are being driven by exogenous factors, reflecting the same bias towards decentralization. Workers are less likely to concentrate in large office campuses where their network connectivity can be protected by closets of security hardware. The proliferation of connected devices and high-bandwidth local wireless networks are creating new opportunities to streamline industrial processes and enable machine-to-machine coordination. Privacy concerns are prompting government regulations to keep user data within geographic boundaries. The convenience and efficiency of digital engagement are forcing enterprises to move consumer touchpoints onto virtual channels.

Overlaying these trends is an increasing need for security. While hackers have existed for years, the decentralization of defenses and migration away from physical engagement are creating new opportunities to exploit vulnerabilities as technology tries to catch up with consumer habits. Information sharing, corporate-like organization and untraceable payment systems are propelling the practice of hacking into a thriving business function. This has thrust digital security from the back of the enterprise to the front office, layering over every corporate activity. Digital transformation extends the same risks to the enterprise’s customers.

These forces are creating significant opportunities for nimble software, network and security providers. Entrenched technology companies are responding, but existing business incentives and fixed system architectures are creating inertia. Foundations in centralized compute infrastructure, big data stores and network hardware sales are difficult to evolve. Newer companies grounded in a distributed mindset are better positioned architecturally, commercially and culturally to address the new landscape. Focused independent players will carve out large portions of the growing market for distributed Internet services.

In this post, I explore these trends in network connectivity, application delivery and data distribution, and then link them back to the independent, forward-thinking public companies that are capitalizing on them. While many companies are lining up against these trends, I will try to limit my focus on the implications for a few high growth software and network infrastructure companies tracked on this blog. Specifically, these include Cloudflare, Zscaler and Fastly. I will also use this narrative to weave in updates on each company’s recent quarterly results, product developments and strategic moves.

What is Changing?

The fabric of the Internet is being impacted by how we work, interact with the world and manage our lives. Software delivery infrastructure and network architectures are evolving, to both meet these new demands from society and to increase developer productivity. Nefarious actors are on the move as well, organizing in new ways to exploit security lapses.

I will summarize the most significant change drivers below. As investors, these need to be appreciated and monitored. Their momentum has implications for the companies that provide network connectivity, software infrastructure and data storage. As with any rapid industry advance, providers face both new opportunities and risks. Some forward-thinking companies will flourish and slow responders will fade.

Work from Anywhere. Every business must accommodate the ability for the majority of their employees to work remotely. Whether they bring all employees back to the office or keep them all remote is inconsequential. Going forward, CIOs have to plan for the necessary network capacity and configuration to enable all employees to be remote. This is because COVID-19 taught CIOs that another exogenous event (like another pandemic) could force periods of work from home in the future. That possibility has forever changed the planning process.

The outcome is that a plan for 100% remote work, even some of the time, means that prior network architecture designs with controlled entry points (VPN), physical firewalls and one-time authentication will not be sufficiently scalable or secure. Before COVID, with remote work being the exception, a lower expectation for offsite service quality was accepted. Enterprise IT could get away with marginally functional performance through shared VPN and other network chokepoints. The argument was that employees could always drive to the office for a better connection. That is obviously no longer acceptable.

This change pushes CIO’s away from a network architecture designed around limited entry points (hub and spoke, castle and moat) to fully point-to-point connectivity. This allows an employee to connect directly to any enterprise resource from any point on the globe. That requirement favors a distributed network on on-ramps and off-ramps, with entry locations near every city and dynamically routable network paths to every other point.

Additionally, network connections need to be short-lived – they are created and authenticated for the duration of every request. This differs from the prior castle and moat approach of authenticating at a single entry point and then allowing extended access to all resources on the local network. An analogy would be checking a visitor’s ticket once at the entry gate to the amusement park for the day, versus verifying their ticket before every ride. The latter approach is called Zero Trust and is becoming the norm, as a requirement of a fully distributed workforce.

Connected Smart Devices (or Machine-to-Machine). I won’t just call this IoT, although that is an accurate label. I make a distinction because the simplest form of IoT is simple one-directional sensors that collect data and ship it back to a central store. Examples are temperature gauges or moisture sensors for agriculture, or location beacons for packages. While this use case is interesting from a data processing point of view, these devices don’t generate much demand for decision making, cross-device communication or coordination. They just write to a log of values and then periodically ship that data somewhere else for processing.

I think the more interesting opportunity involves any device with sensors, some processing capability and an external interface (display, robotic arms, motors). These IoT devices both monitor and take action. These devices will have logic and something they control in the physical world (and in the case of software agents, the virtual world too). These types of devices are often more effective when coordinated with other devices in a local area.

These devices can connect to a network through a high-speed wireless spectrum (like 5G). Next generation wireless networks offer more bandwidth and broader range, making it feasible to reach all devices within a large building or square kilometer. Network equipment providers are rapidly rolling out systems that allow enterprises to set up their own private 5G networks.

The emergence of new high-bandwidth wireless networks, like 5G, coupled with IoT and automation are driving a significant upgrade of traditional manufacturing and industrial processes. This movement is being dubbed Industry 4.0, which involves integration of industrial systems for increased automation, improved communication and self-monitoring. Smart machines can even analyze and diagnose issues without the need for human intervention.

Beyond our factories, we can look for examples of these types of devices in our homes, commercial buildings and cities. Having temperature sensors throughout a building or a city would generate a lot of data, but that only goes one-way. However, if all the traffic lights in a city were coordinated based on traffic load, that generates an order of magnitude higher demand for compute and data resources.

Another example could be found in our homes, where sensors, control devices and appliances are all connected and coordinated on a local network. Smart homes can be optimized for efficiency and convenience. They also offer new revenue models, as discussed by SAP below.

The reality is that as the cost of sensors comes down, we are designing smarter products and assets that have embedded intelligence and IIoT data points. This means that everything is “smart” and we are only limited by our imagination for use cases.

Let’s take an item like a washing machine. The manufacturer may decide to move to an as-a-service model where they don’t sell the washing machine but offer it as a service and generates a monthly bill based on how many loads of washing you do in a given month.

But to do this they have to be able to track several things. Firstly, you must track how many times the washing machine has been used, so that you can bill the customer. But you also want to make sure that the washing machine is working at an optimal level and isn’t about to break down in mid-wash. This requires a whole new set of sensors on key components of the machine that can be used to alert the manufacturer that the machine needs maintenance or in the worst case, needs to be replaced. If it breaks down everybody loses.

You may also position your product as the “sustainable washing machine” that can provide the customer information on their mobile device about how much electricity it is using, what its carbon footprint is, how much water it is using per wash and much more. Again, this would mean leveraging IIoT sensors to capture and share this information.

Richard Howells, VP, Solution Management for Digital Supply Chain at SAP, Protocol Newsletter

This difference between sensor-based IoT and actual coordinated clusters of smart devices has important implications for the future demand for distributed compute and data storage solutions, often referred to as edge compute. While the potential for IoT is very exciting, I would argue that one-way sensors don’t do much to push forward the need for edge compute resources. Systems of linked smart devices that coordinate to achieve a desired outcome definitely do.

Current use cases for edge compute revolve around speeding up application delivery. By moving compute and data closer to the end user in a distributed fashion across the globe, the user’s perception of application responsiveness will improve. This is certainly a worthwhile cause, as digital experiences become richer and consumer expectations for real-time response increase. The impact on edge compute demand will be linear, though, increasing with the number of users and apps.

The non-linear growth will happen when true machine-to-machine communication takes off, moving beyond single-purpose sensors to connected smart devices. To serve those purposes, the need for distributed compute resources and data storage will explode. A lot of Industry 4.0 workloads might be addressed by locating a mini-data center on the factory floor. That could provide a large portion of the local compute and data storage needed. However, I think an equally large amount of data services will be needed to distribute information and coordinate activity across these factories, and connect them to distribution hubs, transportation services and supply chains.

Another way to consider the potential for machine-to-machine workloads is to think about the current set of Internet “users”. These are primarily people. While mobile phones and other smart devices increased the time a human could engage with the Internet, user growth was still bound by the number of humans on the planet (let’s say increasing 1-2% a year). While many new digital channels are disrupting traditional business models, like e-commerce, video streaming, health care, etc., the ultimate growth of those businesses will be bound by the number of humans available to consume their services.

With autonomous machines, however, the growth potential is non-linear and is bound by device manufacturing. All of these devices will need endpoint protection and secure network connectivity. Monitoring and coordinating them will require new distributed application workloads, with global reach and geographic proximity. Autonomous software-driven control agents will also need to communicate with each other. This growth of machine-to-machine coordination will accelerate the demand for decentralized compute and data storage solutions. This is the potential for investors to harness.

As a simple example, if a temperature sensor recorded the temperature every minute, but the temperature stayed the same for an hour, those 60 entries would not be very useful. Yet, the network transmission cost to ship 60 entries, versus just one summary entry, would be much higher. This reality creates a benefit for regional or local processing at some point between the device and the central processing store. Compute could be located in regional clusters that collects the raw log data and processes it for patterns. The summary data would then be sent to the central repository for further examination.

Data Tsunami. As more data is created by more connected devices across the globe, data pre-processing near the network entry point becomes a requirement. This is because large volumes of log data can be expensive to ship all the way back to a central processing cluster in raw form. Orchestrated systems will be sensitive to latency and benefit from local or regional processing to enable faster decisions and greater resiliency.

This localized data processing capability can be facilitated by edge compute resources. These might execute basic logic to compute averages and deviations, or be enhanced by ML/AI techniques. Edge compute providers would need to support a development environment, some local data storage for caching and a fast runtime. These runtimes would also need to be isolated from other tenants and secure.

Digital Transformation. While it can have a number of implications, digital transformation represents the enterprise process of creating new sales, service and marketing experiences on digital channels to supplement (or eventual replace) physical interactions. Each new digital experience creates incremental demand for software infrastructure. While this migration from offline to online was occurring pre-COVID, the pandemic and social distancing accelerated the process.

I expect this trend to continue. After being forced to utilize digital channels during the pandemic, most consumers have realized that it is more efficient to perform their daily tasks online. Going forward, they will expect to have digital channels available from every company selling them a good or providing a service. If enterprises successfully avoided digital adoption previously, COVID will push them over the edge. Additionally, as one company in an industry creates a better digital experience, their competitors are expected to keep up. This drives an ongoing cycle of one-upmanship.

A good example exists in the restaurant industry. Chipotle now anticipates up to half of all sales to occur online. Even after COVID, they expect this to persist as customers value the time savings and convenience of just picking up their food in the store versus standing in line to order it. They even recently started rolling out digital-only kitchens in heavily populated areas, which completely removes the human interaction. Other chains are building online experiences as well, like Panara, Starbucks, McDonalds, Chick-fil-A, Chili’s, etc.

Digital transformation is also being applied towards making employees more efficient. A good example is new Twilio’s Frontline product and its adoption by Nike. Frontline provides a mobile application for employees that connects them directly to customers via SMS, WhatsApp or voice. While the app is installed on the employee’s mobile device, the login, messaging and phone number are tied to their company’s identity. This allows the workers to have the convenience of their own mobile device, without having to rely on their personal phone number. This release was highlighted during Twilio’s Signal user event last year, as part of a keynote with Nike’s CEO. For Nike, this allows the company to service customers outside of a store environment.

Nike just announced impressive Q4 results. Overall revenue grew by 21% over Q4 2019 (better comparison than year/year due to COVID). Their digital channel, Nike Digital, grew 147% over the same period. They intend to continue this investment in the digital business going forward.

“FY21 was a pivotal year for Nike as we brought our Consumer Direct Acceleration strategy to life across the marketplace,” John Donahoe, President & CEO of Nike said. Now approaching 40 percent, Nike aims for direct sales to represent 60 percent of its business by 2025, with the share of digital direct sales expected to double from 21 to around 40 percent. Earlier this year, Nike’s largest competitor Adidas had announced a similar initiative called “Own the Game”, under which the German sportswear giant plans to reach 50 percent direct-to-consumer sales by 2025. This will include investments of more than €1B in digital transformation.

This direct to consumer trend from major manufacturers obviously has huge implications for digital channel growth. That is because they are cutting out distribution through a physical retail intermediary. By moving their distribution to direct consumer sales, they dramatically increase their reliance on software delivery infrastructure to facilitate the consumer’s ability to browse products, place orders, receive delivery and get customer service.

The implications for compute, storage and networking providers are significant. Moving more consumer experiences to digital channels will require additional software infrastructure, services and delivery consumption. The desire for performance will push some of this compute and data out to the edge. On the employee side, more productivity applications will increase requirements for secure network connectivity. When employees utilize a productivity application on their mobile device, tablet or laptop, they might not be on the corporate network. This means a secure, point-to-point network connection would be established (ideally) between their device and the enterprise application (which may be SaaS, on the cloud or located in a corporate data center). If the employee’s connection were routed through a VPN, it would create more load on that network chokepoint and result in a degraded user experience.

Decoupling Applications. Even before edge computing emerged, developers were breaking up large applications into smaller, independent components. The original Internet applications were monoliths – all code for the application was part of a single codebase and distribution. It executed in one runtime, usually a web server in a data center. Monoliths generally connected to a single database to read and write data for the application.

As applications and engineering teams grew to “web scale”, this approach to software application architecture became more difficult to maintain. With all code shared in a single codebase, isolating changes across a team of tens or hundreds of engineers wasn’t feasible. Test cycles were elongated as QA teams ran through hundreds of regressions tests. The DevOps team couldn’t optimize the production server hardware to match the specific workload demands of the application.

These constraints of a large monolithic codebase sparked the advent of micro-services. A micro-services architecture involves breaking the monolith into functional parts, each with its own smaller, self-contained codebase, hosting environment and release cycle. In a typical Internet application, like an e-commerce app, functionality like user profile management, product search, check-out and communications can all be separate micro-services. These communicate with each other through open API interfaces, often over HTTP.

Micro-services address many of the problems with monolithic applications. The codebase for each service is isolated, reducing the potential for conflicts between developers as they work through changes required to deliver the features for a particular dev cycle. Micro-services also allow the hosting environment to be tailored to the unique runtime characteristics for that part of the application. The team can choose a different programming language, supporting framework, application server and server hardware footprint to best meet the requirements for their micro-service. Some teams select high performance languages with heavy compute and memory runtimes to address a workload like user authentication or search. Other services with heavier I/O dependencies could utilize a language that favored callbacks and developer productivity.

Further up the stack, Docker and Kubernetes allow DevOps personal to create a portable package of their runtime environment. All of the components needed by their application, app server, database, cache, queues, etc., can be codified and then recreated on another hosting environment. This represented a major improvement to the decoupling and reproducibility of software runtimes. It also helped plant the seeds for further decentralization. No longer were production runtime environments constrained to a physical data center that SysAdmins needed to set up and tune by hand.

Finally, the emergence of content delivery networks (CDNs) gave developers the ability to cache components of a web experience that were slow to transmit over the network. These might include large files like images, scripts and even some application data. These caches were located in PoPs that CDN providers distributed across the globe. The benefit of using a CDN was primarily seen in the performance of the web application. Load times came down significantly.

However, CDNs provided developers with a side benefit of decentralization of application architecture. It provided a pattern for distributing application content across the Internet. It also forced models for cache management. These patterns for CDN use apply to distributed data caching and requirements for consistency.

This is to say that developers are already familiar with the idea of distributing their software application components and data across the network. They can rationalize keeping some parts of the application at a centralized core, where scale keeps costs down. Other parts of the application and data can be pushed outwards to users, running in parallel on a distributed network of regional PoPs.

Serverless. Continuing the progression of application decoupling, DevOps teams realized that maintaining large tiers of servers waiting on user requests wasn’t an efficient use of resources. Some parts of the application were only triggered by a separate user action and the output wasn’t something the user needed to monitor. These tended to be asynchronous jobs like sending a confirmation email or writing to an analytics data store.

Because these functions are asynchronous, the serverless runtime could be spun up slowly (referred to as code start time). Some more progressive CDN companies realized that they could adopt a serverless model to allow developers to run code on their geographically distributed network of PoPs. They created optimized runtimes that could start executing code in a few milliseconds or less. This change enabled serverless runtimes to be used for synchronous processing, responding in near real-time to every user request.

This change turned centralized application processing on its head. By allowing any part of a web application to run in a serverless environment, developers could consider moving many functions closer to application users. These new distributed serverless environments could run the same copy of code in parallel across a global network of PoPs. A user connecting from Germany would be served from a PoP in Europe, while a U.S. user would hit the PoP closest to their city. In both cases, the code run would be the same.

This model is easiest to apply to parts of the application that are stateless. For these cases, the serverless function stores no data or utilizes a local cache that persists for the duration of the user’s session. Stateless functions include things like application routing decisions or an A/B test. Short-lived cached data might represent a shopping cart for an e-commerce application or an authentication token after login. The benefit was that these functions could provide faster response times to the user, resulting in a much shorter application load.

By design, these serverless providers also supported a multi-tenant deployment. CDN providers with multi-tenant distributed compute could execute code for many customers in parallel within each PoP. Because the runtimes for serverless code are isolated from other processes, they could enforce security measures to ensure no data leakage or snooping.

These capabilities supported further breaking up the monolithic application, allowing some functions to be relocated to runtime environments closer to the end user (the edge of the network). Because developers were already versed in centralized serverless environments provided by cloud vendors, this new model of distributed serverless delivery was a logical extension. In addition, they had already conceptualized the use of a global network of PoPs through their experience with CDNs.

Finally, the software frameworks that developers use to create their applications are abstracting away some of the distribution complexity, particularly around state management and caching. Popular frameworks are being updated to account for new delivery paradigms, like edge compute. As an example, the React JavaScript framework is popular with developers for building modern web applications. It facilitates the creation of the client-side application and data management with the back-end. Recently, a new open source project, Flareact, was released for Cloudflare’s edge compute platform. The project owner updated React with new components specifically to support edge deployments. As edge compute becomes more popular, we can expect to see more improvements to common software development frameworks that make distributed application development easier for all levels of engineer.

Data Localization. Regulations are increasing from countries worldwide for data sovereignty. Several entities, like the EU, China, Brazil and India, already have controls in place, or are considering them. As privacy concerns proliferate, we can expect this kind of oversight to increase.

These data sovereignty requirements generally dictate that the data for a country’s citizens be processed and stored locally within that country’s borders. This requirement poses a problem for centralized application hosting models. They would need to have a presence in each country and a mechanism for keeping the data local.

However, if the application hosting model is distributed across PoPs in every country, the provider could enforce logic that services the user’s request within that country’s borders and maintains only a local copy of their private data. This model is easily supported by the CDN providers, who started with a distributed network of multi-tenant PoPs (mini data centers), connected by a software-defined network. As examples, Cloudflare and Akamai have PoPs in over 100 countries.

Identity and Privacy. Speaking of privacy, the Internet has a huge problem with identity and duplication of sensitive user data. Every enterprise application that a consumer interacts with wants their own copy of the user’s identity and metadata. This can include personally identifiable information, like name, phone number, address, email, etc. If the enterprise experiences a security breach, the consumer’s information is compromised. The likelihood of compromise for any consumer is high because their data is copied in so many places.

Additionally, some enterprises will create new data about a consumer, which they have no control over. If there is a mistake (like a credit history), the consumer may not know about it. The consumer may only want to share the data that is necessary for the application to function. In some cases, like on social networks, they prefer pseudonyms to protect their privacy. One user’s identity may be loosely associated with multiple personas with their real-world details kept private.

The current system of user identity would be much improved if the consumer had a method of maintaining their own encrypted store of personal data which could be shared with applications as appropriate for each use. The consumer could review their personal data to ensure it is accurate and up-to-date. With the right protocols, applications wouldn’t need to permanently store a user’s personal data. Rather, they might maintain an anonymized reference ID to that user, and then load the data from the user’s private store during an actual engagement. This is already how some payment systems operate.

Security Overlays It All

All of these trends towards decentralization make securing the underlying systems more challenging. Digital transformation, work from anywhere, IoT, big data, micro-services, all create new attack surfaces. This opportunity is being exploited by what seems to be increasingly well-organized hacker syndicates. Cyber attacks are more prevalent with high visibility impact, as we have seen recently with news reports on SolarWinds, Colonial Pipeline and JBS.

The number of breaches is expanding proportionally to the increase in attack surfaces. To quantify this, Zscaler published an “Exposed” report in June, which provided details on the significant increase in vulnerable enterprise systems over the prior year. This was driven by enterprise response to COVID-19, where companies offered more remote work options for employees, relocated physical experiences on digital channels for their customers and migrated application workloads to the cloud.

Some details from the report were:

- The report analyzed the attack surface of 1,500 companies, uncovering more than 202,000 Common Vulnerabilities and Exposures (CVEs), 49% of those being classified as “Critical” or “High” severity.

- The report found nearly 400,000 servers exposed and discoverable over the internet for these 1,500 companies, with 47% of supported protocols being outdated and vulnerable.

- Public clouds posed a particular risk of exposure, with over 60,500 exposed instances across Amazon Web Services (AWS), Microsoft Azure Cloud and Google Cloud Platform (GCP).

It probably goes without saying, but as we consider solutions to meet new demands for decentralized configurations, security capabilities have to be evaluated as well. A technology provider must produce evidence that they not only offer a distributed service, but that they can keep an enterprise’s employees, devices and data secure in the process. Security capabilities go hand-in-hand with broader distribution of technology services.

Implications for Technology Providers

As the changes that I listed above continue to progress, they create demand for new approaches in several technology categories. Because this is fertile ground, technology providers who move quickly and design for these trends natively stand to benefit the most. The scope and velocity of these changes is creating a large market opportunity. While legacy technology providers and the cloud hyperscalers will likely capture some share, there will still be plenty of growth for smaller, independent players.

I have written in the past about why I think that in many categories of software infrastructure, security and networking that independent providers can outperform the large technology vendors and hyperscalers. Advantages revolve around their cloud agnostic posture, focus, talent and product release velocity. This continues to be the case today, and will likely be increasingly magnified going forward, as the technology landscape becomes more complex. This explains why independent companies increasingly dominate a number of service categories, like Twilio in CPaaS, Datadog in observability and Okta in identity. Even software service categories closer to the core of cloud computing, like data warehousing and databases, are experiencing encroachment from newer entrants like Snowflake and MongoDB (who incidentally run on the hyperscaler’s compute and storage infrastructure).

This isn’t to say that cloud vendors won’t field competitive solutions in new categories like edge computing and network security. However, by their very nature, self-interest, size and inertia, they will be slow to respond or leave large gaps for independent providers to exploit. Additionally, secular changes often provide a tailwind for all market participants.

The changes discussed earlier in remote work, consumer preferences and software delivery modes will impact four categories of technology services. I will discuss each below, focusing on what is changing and the requirements to address the new approaches. I will later tie these requirements to the product offerings and platform infrastructure of three leading, independent providers, who I think stand to benefit the most. The companies are Cloudflare (NET), Zscaler (ZS) and Fastly (FSLY). I currently or previously owned shares in each of these companies in my personal portfolio.

Network Connectivity

What is the Change?

Enterprise network connectivity is undergoing two major shifts, both of which represent a move from centralized routing and access control to point-to-point connectivity (SASE) and single use authentication (Zero Trust). These changes are being driven by the remote workforce, the proliferation of user devices and heavy use of SaaS applications for critical enterprise functions. When all employees worked in a corporate office on their company-issued desktop and all their productivity applications were located in the company’s data center, it was straightforward to connect these resources on a single corporate network and protect that from the public Internet with firewalls. For the occasional case of an outside party needing access to the corporate network, a VPN provided the controlled connection method.

However, as the majority of network users and enterprise software applications (SaaS) move outside the corporate perimeter, it becomes increasingly difficult to maintain a scalable firewall/VPN configuration. The stopgap measure was to force users to connect to the VPN in order to then be connected back to a SaaS application out on the Internet. This allowed the enterprise to control access and monitor traffic, but caused serious lag for users and capacity constraints for VPN hardware.

A better solution is to decentralize access by extending the whole network plane across the Internet. This means establishing network onramps near every population cluster and SaaS hosting facility globally. These onramps represent small data centers (PoPs) with clusters of servers and multiple high-throughput network connections to the Internet backbone. These PoPs are all interconnected by a secure private network, in which routing is defined by software (SDN). A software-defined network (SDN) allows the route to be changed for every request. SDN contrasts with the older approach of maintaining fixed routes that are only updated by a configuration commit on the physical equipment.

A point-to-point model (through PoP to PoP connections) obviates the prior approach of having a single entry point for corporate traffic ingress and egress. The benefit of the single controlled entry point was that the user could be authenticated once and then granted access to all resources on the corporate network. As hackers became more sophisticated, this method of authenticating once for a golden ticket provided a useful way to access many corporate resources through a single exploit. Once they gained access to the corporate network, hackers could “move laterally” to attack every resource.

Given this risk, security teams and providers began adopting a “Zero Trust” posture. In simple terms, this means that the user has to authenticate to every corporate resource separately on every interaction. This replaced the prior approach of authenticating once and then having access to all resources as long as the user session was active. Zero Trust significantly reduced the hacker’s ability to move laterally around the corporate network.

Point-to-point network connectivity and Zero Trust naturally work together. If a user’s device can connect directly to every resource, then that connection would have to be authenticated on each attempt. Forward-thinking providers realized this and stitched the two together. They built a global, intertwined network of PoPs and layered access controls on top of it. This way, a user anywhere on the globe could be routed to the closest PoP and authenticated to the resource they wanted to access. To manage this, the user would run a software agent on their device. Enterprise applications could similarly be obfuscated from public view and third-party SaaS applications could only be reached through controlled connections.

The CEO of Zscaler summarized the new demand environment as “The Internet is the new corporate network, and the cloud is the new center of gravity for applications.” This underscores the outcome of applying Zero Trust practices to a SASE network. With those services layered over the Internet, it is feasible for enterprises to treat the Internet like a private network. This new approach replaces years of capital spend on fixed network perimeters and physical devices to protect them.

What does a provider need?

In order to support a SASE network configuration with Zero Trust access control, a provider needs to have several capabilities.

- Network of entry points (PoPs). A provider should have owned and operated PoPs distributed globally, located in proximity to major population centers. Each PoP contains a concentrated cluster of high-performance servers with multiple network connections to the Internet backbone. In theory, the function of a PoP could be deployed on top of infrastructure maintained by cloud vendors, and some providers have tried this approach to fast-track the creation of a global PoP network. However, this tactic will ultimately be less effective. The provider will be limited by control over the full network stack (SDN), flexibility to customize hardware and geographic proximity.

- Software defined networking (SDN). Software defined networking provides the ability to dynamically and programmatically control the configuration of the network and the routing of traffic through it. This yields two benefits. First, the PoP can determine the routing for each request in real-time. This might be used to find an optimal path to the destination based on congestion or for certain types of content, like video, to ensure high availability. The second benefit is security related. The system can monitor for nefarious incoming traffic patterns, like a DDOS or bot attack, and re-route or blackhole that traffic.

- Traffic inspection. As network data packets flow through the PoP, it needs the ability to inspect them. This requires a lot of compute power as each data packet must be parsed, looking for pre-defined patterns. As most Internet traffic is encrypted, this examination needs to include SSL inspection. Decryption adds to the compute load. Traffic inspection gives the network provider the ability to identify malicious code or sense denial of service attempts.

- Threat monitoring. Foundational to handling all of an enterprise’s network traffic is the ability to maintain security. Fortunately, with all that traffic flowing through their PoPs and the ability to examine it, distributed network providers are in a very favorable position. They have visibility into a huge amount of activity on the Internet, aggregated across many enterprises. They get a first look at nefarious behavior and unusual patterns of activity. These can be quickly examined by a security-minded team to create new patterns to monitor. Similarly, AI/ML routines can be overlaid on the network data to automatically flag potential security threats. These threat identifiers can then be fed back into the real-time monitoring system. Of course, with a software defined network, the system can immediately take action to block or blackhole the activity. Or, if the threat isn’t 100% verified, the system could require an additional user action, like issuing a warning about a download or asking for further authentication.

Application Processing and Runtime

What is the Change?

Digital transformation, IoT and big data creation are increasing the demands placed on software delivery infrastructure. Consumers expect feature-rich, responsive digital experiences from enterprises. In the early days of the Internet, hosting an applications’s full runtime and data in one geographic location to serve the globe was marginally acceptable. For today’s global software services, that is no long the case, as latency impinges on usability.

By placing an application’s resources closer to the end user, transit times are reduced. This was the genesis for CDN’s in the early 2000’s for web content. But, early CDN’s were limited to static content, not dynamic processing. With IoT, clusters of connected smart devices will operate more efficiently if the application coordinating them is running in close geographic proximity. The shipment of large volumes of raw sensor data to a central location for processing is inefficient and costly. That data can be processed locally and the summary can be forwarded.

These factors all favor distribution of compute resources into regional locations across the globe. If an application can be subdivided into services and the most latency sensitive ones can run close to the user, then lag can be minimized and data transit costs will be reduced. This is made possible by distributing compute, in addition to content, to inter-networked data centers (PoPs) spread across the globe. Each PoP can maintain a copy of the application’s code and the ability to instantiate a runtime quickly in response to each user’s request. This code would run independently of the rest of the application, communicating with other services when necessary through open network protocols.

In order to prevent application owners from needing to run their own hardware in each PoP, the runtimes would be serverless and multi-tenant. Each runtime would be isolated from the others, without sharing resources like memory. Developers could address these environments from a single instance, and have their code duplicated globally on the click of a button.

What does a Provider Need?

- Serverless, multi-tenant runtime environment. In order for a provider to execute customer code in a distributed fashion across a network of PoPs, the runtime really has to support multi-tenancy. It wouldn’t be scalable to dedicate compute resources to each customer. This means that customer code is loaded into an isolated runtime environment on every user request. That runtime does not share memory space with any other process on the CPU. Similarly, the runtime must be serverless, or else it wouldn’t scale (the provider can’t keep a server running continuously for each customer). Running code for many customers in parallel within the same set of physical servers, duplicated across tens or hundreds of PoPs, is not a trivial undertaking. It requires sophisticated capabilities that only a handful of companies can address. The capital investment to deploy this kind of configuration is significant, creating a barrier to entry and hence a moat. While a provider could in theory spin up clusters of servers on hyperscaler data centers to emulate a PoP, they would never match the performance or efficiency of a provider that owns and operates their PoPs.

- Isolation. The risk for a multi-tenant provider is that a hacker finds a way to snoop on the application data of another customer’s runtime. In order to prevent this, the provider needs to design the runtime such that each customer process is fully isolated. This means that no memory can be shared and all data residue must be purged from processor caches before the next customer request is started. Also, the provider should employ sophisticated monitoring to look out for activity indicative of a snooping attempt. Usually, this takes the form of unusual memory scans or repeated runs of certain type of code that reveals characteristics of the CPU. Providers can monitor for this activity and block customers that engage in this behavior.

- Code distribution. A provider needs the ability for customers to upload code into the distributed runtime environment and have that be disseminated automatically to all PoPs. Ideally, updates would be rolled out very quickly, so that a customer can address critical fixes in near real-time. Additionally, customers would need the ability to access a test environment and promote code from one environment to another.

- Observability. The customer will want to understand how their code performs in the distributed runtime environment. They will want to be able to measure speed of execution and identify any bottlenecks (APM). Also, errors should be logged and surfaced for the customer, either through a real-time interface, or integrated with a log analysis tool. Ideally, the monitoring is aware of the execution path across all services within the application, allowing the customer to identify problem areas. Integration with other popular cloud-based observability tools (like Datadog) is useful here.

Data Distribution

What is the Change?

As application logic moves away from centralized data centers to locations closer to the user, the breadth of addressable use cases expands if data can be stored locally. There are some use cases that run at the network edge which are stateless. These include simple routing rules, API request stitching and logic gates, like an A/B test. In these cases, there isn’t a need to access persisted user-specific data. While these use cases provide a performance benefit to the application by relocating to the network edge, they account for a small percentage of an application’s functionality.

A broader set of use cases is available when user data can be stored across requests. The first level of progression is a cache into which user data can be stored during a user’s session. The cache will generally be localized to the PoP that the user is currently connected and will be purged after some period of time. A simple cache does open up another slice of use cases, like authentication tokens, session data, shopping carts and content preferences. This type of data can be pulled from the central location at the start of a user’s session. Or, it can be compiled over the course of the session. In either case, with cached data available for the application at the local PoP, the user’s perceived response will be much faster.

A bigger benefit occurs when an application is coordinating activity across multiple connected devices in a geographic area, like all the traffic lights and flow sensors on a city’s traffic grid. For this case, having data available to the application in a PoP near that city would make a huge improvement in performance, versus shipping every data update and logic decision to a central data center on the other side of the continent.

This clustering proximity benefit can apply to human users as well. If multiple users in a geographic location are interacting with the same application at once, then caching application data within a local PoP will improve responsiveness. This could apply to the experience for a multi-player game with geographic context (like Pokeman Go) or a collaboration tool (like a shared document). On the industrial side, clusters of human operators, like in a hospital, retail location or construction site would see a benefit.

The next stage of data distribution is to store user data across sessions. This starts to lend permanence to it. In this case, the provider has to think more broadly about security of the data and capacity. They would need to set expectations with application hosting customers about how durable the data can be and whether they still need to write back to a central store after each user session in order to ensure no data loss. As the data storage capacity at each PoP is presumably finite (central data centers can manage potentially infinite capacity more easily), the provider would need to establish policies to manage data usage across customers and consider how long they need to retain a customer’s data in each location.

The final solution for fully decentralized data stores is to support full-featured transactional databases at each edge node. These would be located in every PoP (or at least geographically close to it) and available to the application runtime. Data would be persisted across user sessions and available to all users of the application. That application data could be shared across PoPs, with an expectation that state would be eventually consistent between nodes.

A requirement for strong consistency increases the complexity of the system by an order of magnitude. Clustered data storage systems like Cassandra have wrestled with various algorithms to ensure that data is synchronized to all nodes. There are different tactics to address consistency. Other approaches involve a form of data replication. Some providers are researching new data storage methods, like CRDTs, which sidestep challenges around ordering of data updates by supporting only data structures that support consistency in all cases.

Regardless of the approach, data access patterns should be familiar to developers. Caches are commonly used in central data centers to prevent heavy database queries. Examples are Redis and Memcached. These are generally structured as key-value stores. These systems provide client library code in many programming languages that make the process of reading and writing key values very easy – abstracting away any underlying complexity of setting a unique key, checking for stale data, triggering a refresh, etc.

For full-fledged databases at the application edge, the ideal is standard support of CRUD actions through SQL. Most developers are familiar with relational databases, or document-based data stores like MongoDB. In either case, the developer should be able to expect access libraries provided for their language of choice and adherence to common data access patterns.

For all of these solutions, data residency can add another layer of complexity and requirements. As governments and user organizations stipulate a preference for keeping user data within a geographical boundary, data distribution strategies to geographically bound PoPs suddenly move from the realm of application performance optimization to core requirement. This also argues for permanent data storage in each PoP, versus a cached approach. This is because data in a cache would eventually need to be written to a central store in order to persist across user sessions. If that central store is in another country, then the residency requirement is broken.

Currently, data residency requirements are manageable, because the number of countries with active regulations is limited to the EU and China. A few other countries, like India and Brazil, are considering it. This level of adoption is manageable because a central data center could be established in those countries and the application instance would be constrained to that geographical boundary. However, if these regulations proliferate and every country adopts them, then distributed data storage will quickly become mainstream. Maintaining an isolated copy of every application and its data in each country would be difficult to manage. Utilizing a serverless, distributed system of compute with localized, permanent storage, would offer a much better solution architecturally.

What does a Provider Need?

In order to offer a solution for localized distribution of application data, a provider needs a few capabilities. These assume they already have a global network of PoPs connected by a SDN.

- Data Storage. Obviously, the basic capability is to have a place to store data. This requires a large amount of memory (for caches) and fast disk (for long-term storage). There should be enough capacity planned to accommodate data usage for all customers. Capacity planning becomes more stringent than for centralized data center storage providers because data storage is duplicated across all PoPs. Depending on the expectation set with customers for durability of their data, the provider would need to set up algorithms for purging older data to create space for newer data when needed.

- Consistency Methods. If the provider sets an expectation that application data is available across PoPs, they will need mechanisms to distribute copies of data. They also need to have methods to address eventual consistency or enforce strong consistency. Each has trade-offs in access latency and durability.

- Localization Controls. The provider should have have capabilities to keep application data within geographic boundaries when required. This implies a mapping of PoPs to each country and methods to override user-to-PoP assignments based on country borders and not geographical distance (closest PoP to a user may be across a border). In the event of a network or PoP failure, the same logic should be enforced, overriding standard re-routing algorithms.

- Developer-friendly Interfaces. As providers set up distributed data stores and make them available to application developers, the data access patterns and tooling should be familiar. If the data store is a key-value store, the developer should have access to a code library that abstracts the mechanics of setting and updating key values. If a full-featured database is available to the developer, the provider should mirror well-understood patterns for data access, like SQL support.

Identity

What is the Change?

In response to concerns around user privacy, one of the more recent trends is decentralization of identity. In this context, the ownership and distribution of user data is moving from multiple copies managed in centralized enterprise stores to a single copy managed by each user. Rather than establishing a duplicate copy of login credentials, preferences and personally identifiable information (PII) with each digital business, the user would manage a private, encrypted copy of their own data and share that with each digital channel as needed. Identity would be established through a single, consistent authentication method. The user could share data needed by each enterprise on a temporary basis. Enterprises would be prohibited from storing identifiable user data permanently.

In this way, identity management would be distributed to the users themselves. A user’s identity might be established initially by government policy, and then built up with other third-party credentials. User reputation would accumulate over time and verifiable credentials would allow commercial parties to assess risk based on the depth of user reputation available. The key distinction from today’s model is that the user would be in control of what credentials they assemble and the depth of data they are willing to share.

One emerging standard that addresses these goals is Decentralized Identifiers (DIDs). These enable verifiable identity of any subject (person, organization, etc.) in a way that is decoupled from centralized registries or identity providers. DIDs can be established for an individual, allowing them to engage with any Internet service with a single identity over which they have full control. The individual can decide the level of personal information to share with each application.

DIDs begin life with no evidence of proof, representing an empty identity. They can then be tied to an owner who can prove possession of the DID. DIDs can build up legitimacy through attestations from trust providers like governments, educational institutions, commercial entities and community organizations. Any of these endorsements could be independently verified. By accumulating attestations from multiple trust systems over time, an identity can build up enough proof of its legitimacy to enable it to surpass whatever risk level is established by an app or service.

As an example, the identity of Jane Doe might be established at birth by her government. She would be assigned a cryptographically secured wallet, into which all identity information would be stored. As she aged, additional attestations from educational institutions (test scores, degrees, other credentials), financial organizations (credit scores, loan repayment, accounts) or civic organizations (membership) might all be collected. Jane would also be able to keep personal information (name, address, phone, preferences ) up to date. Finally, she could set up different profiles that would be shared with online services based on context. Twitter might get a pseudonym with limited information, whereas her bank would have access to her full identity.

What does a Provider Need?

In order to support a decentralized identity service, a provider would need to support several components of the DID service framework. As a software infrastructure platform, their solutions would primarily enable applications to interact with these new identity services and provide network connectivity to use them for authentication. Since these standards are emerging, providers have latitude to determine what services they plan to offer.

- Identity Hub. A provider might host a version of an identity hub. This is a replicated database of encrypted user identity stores. These facilitate identity data storage and interactions. They also allow authentication with other systems like applications and network access. Network providers might maintain a purpose-built version of an identity hub on behalf of their enterprise network customers to facilitate Zero Trust.

- Universal Resolver. A universal resolver is a service that hosts DID drivers and provides a means of lookup and resolution of DIDs across systems and applications. Upon authentication, the resolver would provide an identity object back to the requestor which contains metadata associated with the DID. A provider might host a version of the universal resolver for its customers.

- User Agent. User agents are applications that facilitate the use of DIDs for end users. This might not represent a consumer service, but could be a software application provided to enterprise customers for their employees to utilize. This generally takes the form of a cryptographically signed, wallet-like application that end users utilize to manage DIDs and associated identity data.

- Decentralized applications and services. Since providers offer development and runtime environments for application builders, they could host applications or services on behalf of decentralized organizations. These might interact with end users through services supported by providers that interface with end users through public DID infrastructure.

Web 3.0 and Dapps

I realize that the term decentralization is sometimes associated with new developments around decentralized apps (dapps) and Web 3.0. My use of decentralization focuses primarily on the associated changes in software delivery architecture, data storage and network infrastructure, versus considerations for token economics, autonomous organizations and distributed ownership. Putting aside business models, I focus on the technology needed to deliver the next generation of digital experiences at scale.

As current software infrastructure providers evolve to support a more decentralized application architecture and network configuration, many of the patterns employed overlap with Web 3.0 concepts like edge computing and distributed data structures. The development of large parts of decentralized applications could be supported by existing software infrastructure providers, whether through existing products or a future incarnations.

In fact, many businesses that are blockchain-related employ software services from existing infrastructure providers. As an example, the decentralized exchange dYdX utilizes the Ethereum blockchain to record transactions. The vast majority of activity on the exchange is stored in the order book, which is processed by the matching engine. The order book records all the offers to buy or sell a cryptocurrency and the matching engine generates the actual purchase transactions. Due to the type of activity on the exchange (derivatives, options), a subset of all orders actually result in a transaction which is recorded on the blockchain.

In an interview on Software Engineering Daily, the founder of dYdX discussed the technology platform behind the exchange in detail. He mentioned that the order book and matching engine, which handle over 99% of the activity on the exchange, are hosted on AWS and utilize PostgreSQL as the data storage engine. While the final transaction is recorded on Ethereum, the rest of the application utilizes existing software infrastructure.

The Serverless Chats Podcast offers a wonderful resource on the state of serverless computing and touches on many concepts of both distributed and centralized implementations. In a recent show, the host interviewed the Developer Relations Engineer at Edge & Node, which is the company behind The Graph. The Graph is an indexing protocol for querying blockchain networks. Data collected can then be exposed through open APIs for dapps to easily access. Edge & Node also builds out decentralized apps themselves.

During the discussion, the two were hypothesizing about practical use cases for Web 3.0 technologies and the flavors of applications that would be suitable for this application infrastructure, at least in the near term.

To me when I think about building apps in Web2 versus Web3, I don’t think you’re going to see the Facebook or Instagram use case anytime in the next year or two. I think the killer app for right now, it’s going to be financial and e-commerce stuff.

But I do think in maybe five years you will see someone crack that application for, something like a social media app where we’re basically building something that we use today, but maybe in a better way. And that will be done using some off-chain storage solution. You’re not going to be writing all these transactions again to a blockchain. You’re going to have maybe a protocol like Graph that allows you to have a distributed database that is managed by one of these networks that you can write to.

Serverless Chats Podcast, Episode 106

Some of the emerging concepts in decentralized identity management are very interesting and likely have near term broad application. As discussed earlier, Decentralized Identifiers (DIDs) enable verifiable identity of any subject (person, organization, etc.) that is decoupled from centralized registries or identify providers. Not wanting to miss out on this trend, Microsoft is building an open DID implementation that runs atop existing public blockchains as a public Layer 2 network. This will be integrated into their Azure Active Directory product for businesses to verify and share DIDs.

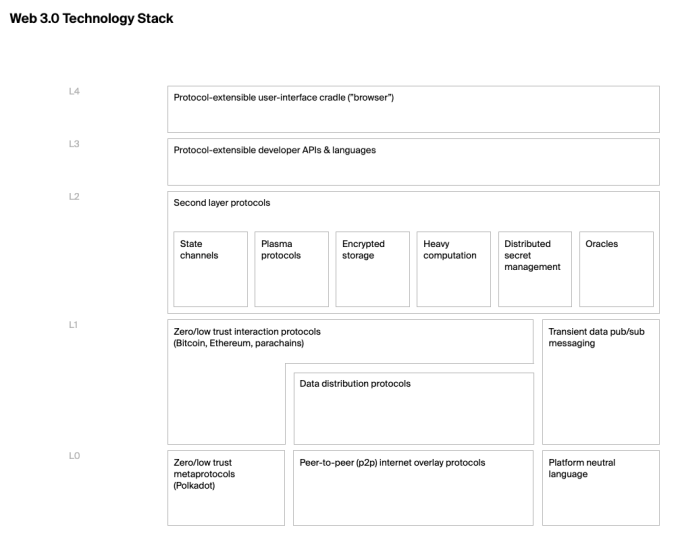

Like Microsoft, other existing software infrastructure providers can participate in the evolution of Web 3.0, dapps and blockchain-based networks. Fundamentally, the same arguments for decentralization of compute, data and network flows align with the trends we have been discussing in this blog post. The Web3 Foundation published a useful block diagram of the Web 3.0 technology stack, depicting the different layers upon which a decentralized app could be built. These are labeled in generic software component terms.

Abstracted out in this way, many of these higher-level software services could be offered by existing technology companies. As an example, DocuSign saw an opportunity early to provide support for blockchain. In 2015, they worked with Visa to create one of the first public prototypes for a blockchain based smart contract. DocuSign is currently a member of the Enterprise Ethereum Alliance, and in June 2018, announced an integration with the Ethereum blockchain for recording evidence of a DocuSigned agreement.

A similar argument can be made for Cloudflare. Their mission is to “help build a better Internet”. With over 200 PoPs (data center locations) spread across 100 countries, they can generically be considered as a high capacity distributed network. In a recent interview on alphalist.CTO, Cloudflare’s CTO, John Graham-Cumming, described the function of his team as focusing on future technologies and innovation for Cloudflare.

On the podcast, the CTO mentioned they had been examining the function of Ethereum and blockchain-enabled networks, and what opportunities may exist to align these with Cloudflare’s basket of software services. Along these lines, Cloudflare announced in April that they had added support for NFTs to their video Stream product. With their product development momentum, developer centric motion and financial position, it wouldn’t be a stretch to see Cloudflare expand their offerings to address other aspects of Web 3.0 software infrastructure and tooling. Many of the architectural advantages of dapps, like isolation, autonomy and data distribution, are characteristics of serverless edge computing.

In fact, a smart contract could be generically viewed as a distributed serverless function. It is created using a programming language and compiled into bytecode that runs on a virtual machine. The code applies conditionals, invokes loops, specifies actions and updates state. This code is executed across a distributed network of processing nodes that respect multi-tenancy. These are characteristics of edge compute solutions provided by Cloudflare and Fastly.

One of the future directions for optimizing the Ethereum network involves updating the Ethereum Virtual Machine (EVM). One of the proposals that appears to be gaining steam is to migrate the runtime for Ethereum transactions to be based on WebAssembly (WASM). This flavor of virtual machine is being referred to as eWASM, basically Ethereum’s transaction framework based on WebAssembly. eWASM provides several benefits over EVM, including much faster performance and the ability to utilize multiple programming languages for smart contracts.

The reason I find this interesting is that several serverless edge networks utilize WebAssembly as the runtime target. These products include Cloudflare Workers and Fastly’s Compute@Edge. In the case of Fastly, they further optimized the WASM compiler and runtime for extremely high performance, released as the open source project Lucet. Lucet claims to the best performing WASM runtime available. I mention these examples because if Ethereum moves its virtual machine to be WASM based, then software providers like Cloudflare and Fastly may be able to extend their existing WASM tooling to offer services to the smart contract ecosystem.

I think decentralized applications and infrastructure have a lot of promise. The leading technology providers today support many of the underlying architectural concepts and could offer software services to address them. As with dYdX, these new business models should generate incremental usage for existing infrastructure providers, not less.

Leading Players

Since this is a blog primarily about investing, I want to make these decentralization trends actionable for investors. To do that, I will apply them to three independent leaders in secure, distributed networking and compute. These publicly traded companies are Fastly (FSLY), Zscaler (ZS) and Cloudflare (NET). I will also use this opportunity to provide a review of their most recent quarterly results and how I am allocating my personal portfolio towards these stocks.

I realize that the space of edge compute, networking and security is broad, and that many larger technology providers, including the hyperscalers, are converging on this space. My thesis is that smaller, nimble independent providers will be able to carve out a meaningful share of the market due to their focus, product velocity and appeal to talent.

Fastly (FSLY)

Fastly started as a CDN provider, but always had aspirations to offer a full-fledged edge compute environment. Their network currently spans 58 locations across 26 countries. Each location can contain one or more PoPs, as represented by their “Metro PoP” strategy for high density areas, like Southern California.

Early on, Fastly established a strategy of building fewer, more powerful PoPs at select locations around the world. By utilizing PoPs with large storage capacity, they achieve high cache hit ratios for content requests. This can result in better performance for their customers, as a cache miss can cause much more overall latency as the request routes all the way back to origin. They also maintain high throughput on their network, with 130 Tb/sec of capacity available, triple the value at IPO in 2018.

Because of this focus on performance, Fastly built up a strong stable of marquee digital native customers. The majority of their revenue is still derived from content delivery, although they also offer security products focused on application delivery continuity. These include DDOS, bot mitigation and WAF/RASP. Their application security capabilities were solidified by the 2020 acquisition of Signal Sciences.

Besides the addition of a full suite of application security services, the big opportunity for Fastly is to leverage their interconnected network of PoPs to deliver distributed application processing and data services. These are encapsulated in Fastly’s serverless edge compute offering, called Compute@Edge. Fastly has been working on this platform for several years and moved it into Limited Release in Q4 2020.

As Fastly was designing their edge compute solution, they evaluated existing technologies for serverless compute, like re-usable containers. However, they found the performance and security capabilities to be lacking. They ultimately decided to leverage WebAssembly (Wasm) which translates several popular languages into an assembly language that runs very fast on the target computer architecture.

WebAssembly was spawned as a browser technology, which has been recently popularized for server-side processing. At the time, the common method of compiling and running WebAssembly was to use the Chromium V8 engine. This results in much faster cold start times than virtual containers, but is still limited to about 3-5 milliseconds. V8 also has a smaller, but non-trivial, memory footprint than a container. In the end, Fastly wanted full control over their environment and decided to build their own compiler and runtime, optimized for performance, security and compactness.

This resulted in the Lucet compiler and runtime. Fastly has been working on this behind the scenes since 2017. Lucet compiles WebAssembly to fast, efficient native assembly code, which enforces safety and security. Fastly open sourced the code and invited input from the community. The was done in collaboration with the Bytecode Alliance, an open source community dedicated to creating secure software foundations, building on standards such as WebAssembly. Founding members of the Bytecode Alliance are Mozilla, Red Hat, Intel and Fastly. Microsoft and Google recently joined as well, adding more industry clout to the movement.

Lucet supports WebAssembly, but with its own compiler. It also includes a heavily optimized, stripped-down runtime environment, on which the Fastly team spends the majority of their development cycles. Lucet provides the core of the Compute@Edge environment. As an open source project, customers can choose to host it themselves.

In December 2020, Shopify did just that. They added a new capability to their core platform that allows merchants to create custom extensions to standard business rules. These can be coded by the merchant or partner, but are run in a controlled environment within the Shopify platform. This approach allows Shopify to ensure the code is executed inline, but in a fast, isolated environment.

For this platform capability, the Shopify team chose to use Lucet. Shopify selected this architecture for the same reasons that Fastly decided to build it themselves. While most serverless compute runtimes leverage the V8 Engine, Fastly’s Lucet runtime is purpose-built for fast execution, small resource footprint and secure process isolation. Lucet generally exceeds V8 performance along these parameters.

In ecommerce, speed is a competitive advantage that merchants need to drive sales. If a feature we deliver to merchants doesn’t come with the right tradeoff of load times to customization value, then we may as well not deliver it at all.

Wasm is designed to leverage common hardware capabilities that provide it near native performance on a wide variety of platforms. It’s used by a community of performance driven developers looking to optimize browser execution. As a result, Wasm and surrounding tooling was built, and continues to be built, with a performance focus.

Shopify Engineering Blog, dEc 2020

This choice by Shopify validates Fastly’s architecture design and general approach. Keep in mind that Shopify could have chosen to utilize the V8 Engine for the same workload. However, they favored the near instant cold start time of 35 micro-seconds and the smaller footprint offered by Lucet. Additionally, they decided to support AssemblyScript as the programming language for partners to utilize for this environment. This is also one of the first-class languages for Compute@Edge. Shopify plans to invest engineering resources in building out new language features and developer tooling for AssemblyScript. This work will in turn help Fastly, as AssemblyScript has become core to Fastly’s strategy of catering to JavaScript developers.

For more background on Fastly’s edge compute solution, I covered it extensively in a prior post. When compared to other serverless, distributed compute runtimes (specifically the V8 Engine), Fastly’s solution has the following distinguishing features:

- Performance. As Shopify’s feedback indicates, Lucet and Fastly’s Compute@Edge platform have the best performance of any serverless runtime environment. This is measured in cold start times (100x faster) and memory footprint (1,000x smaller).

- Language Support. However, this performance comes with a major drawback. Lucet only supports languages that can be compiled into WebAssembly. Currently, this includes Rust, C, C++ and AssemblyScript. This leaves out a number of popular languages that are supported by other runtimes, most notably Javascript. While seemingly downplaying the need to support JavaScript initially, the Fastly team has now prioritized development to add support for it.

- Operating System Interface. While Lucet runs WebAssembly code in a secure sandbox, there are potential use cases that would benefit from having access to system resources. These might include files, the filesystem, sockets, and more. Lucet supports the WebAssembly System Interface (WASI) — a new proposed standard for safely exposing low-level interfaces to operating system facilities. The Lucet team has partnered with Mozilla on the design, implementation, and standardization of this system interface.

- Security. Because of super fast cold start times, each request is processed in an isolated, self-contained memory space. There is only one request per runtime and data residue is purged on conclusion. The code compiler ensures that the code’s memory access is restricted to a well-defined structure. Lucet employes deliberate memory management to prevent cross-process snooping.

For languages in particular, because Fastly chose to build their own runtime in Lucet (versus leveraging the V8 engine, like most competitors), Compute@Edge will only run languages that can be compiled to produce a WebAssembly binary. This greatly reduces the language set available (at least currently), relative to the V8 engine. The V8 engine can also run JavaScript, which expands the set of candidate languages greatly, as many popular ones have an available compiler to JavaScript. Fastly’s Chief Product Architect explained this design choice for Compute@Edge in a past blog post.

During the Altitude user conference last November, Fastly’s CTO was optimistic that language support for WebAssembly will continue to expand in the future. In fact, when asked a question about the 5 year vision for Compute@Edge, he felt that all popular languages will eventually support WebAssembly as a deployment target. This will significantly lower the adoption barrier for less experienced developers.

Distributed Data Storage

While Fastly’s development of a highly performant serverless edge compute platform has been notable, their public progress on a distributed data store has been slower. They are developing a fully distributed data storage solution as part of their Compute@Edge platform. On a recent podcast on Software Engineering Daily, Fastly’s CTO said the founders first wanted Fastly to provide a distributed data service from their POPs. But, they soon realized this would be more relevant to developers if it could be referenced from a compute runtime. This caused them to prioritize building out the Compute@Edge solution first, before tackling data storage.